Emerging Patterns for Designing in the GAI Gold Rush

The genie is out of the bottle

Are you an AI optimist or an AI pessimist? It’s easy to get worked up when envisioning this future. I know I have. But regardless of how you feel, AI is here to stay. The technology is so powerful and disruptive that those who refuse to use it risk being left behind. The world has seen this technology, and it can’t be unseen.

As with any emerging technology, there is a need for ethical guidelines to regulate its usage. Some may suggest an outright ban until we figure out how to regulate it, but that would only hurt us in the long run. There is no advantage to banning this tech while other parties are quick to invest and accelerate.

Consider education: many schools have reacted to students' use of AI by trying to catch and penalize them. But what will happen once those students finish school? In the real world, they will need these tools to succeed. We should be equipping the next generation to solve the right problems using these emerging tools.

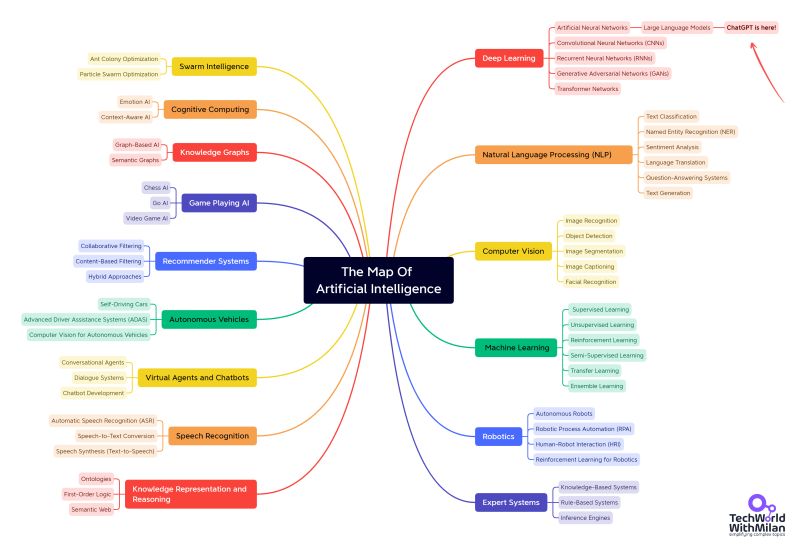

Getting started: Familiarize yourself with an AI taxonomy

There is a lot of confusion between Narrow AI and AGI. Narrow AI, also known as weak AI, refers to task-specific tools that are powerful yet limited in scope. AGI (Artificial General Intelligence), on the other hand, is often portrayed as sentient robots like those in the Terminator movies, and it remains theoretical. Because we tend to anthropomorphize AI, especially in chat interfaces and physical robots, people often mistake Narrow AI for AGI. This confusion leads to fear and aversion when people are introduced to our new AI future.

You’re probably starting with AI because of the sudden surge of interest in LLMs (Large Language Models), most notably OpenAI’s ChatGPT. There are many types and combinations of AI technology, and most of them existed before the ChatGPT craze began. OpenAI’s recent success comes from giving users a powerful chat interface (for free) and democratizing this technology for the general public. Chances are your mom has even dabbled with ChatGPT to find recipes, ask biased philosophical questions, or write hip-hop lyrics about certain political figures…

You may have noticed that LLMs do not all perform the same. Models can be trained to work better for specific domains and industries, such as law (models benchmarked on CaseHOLD), biomedicine (BioBERT), finance (BloombergGPT), coding (StarCoder), medicine (Med-PaLM), climate (ClimateBERT), and more. We will see these models grow, compete, and diversify.

SLMs (Small Language Models) are much smaller than LLMs and are usually intended for a narrower set of use cases. They are small enough to run locally on a device! Google has been doing this on its Pixel phones. Because data can stay on the device instead of being sent to a server, SLMs can offer better privacy and security, and they are becoming more and more capable.

Retrieval-Augmented Generation (RAG) combines a retriever component, which identifies relevant information in a knowledge database, with a generator component, which produces the final response. The retriever searches the knowledge database and returns the subset of data deemed most relevant to the query. These retrieved passages are then passed to the generator, which produces a response based on both the input query and the retrieved information.

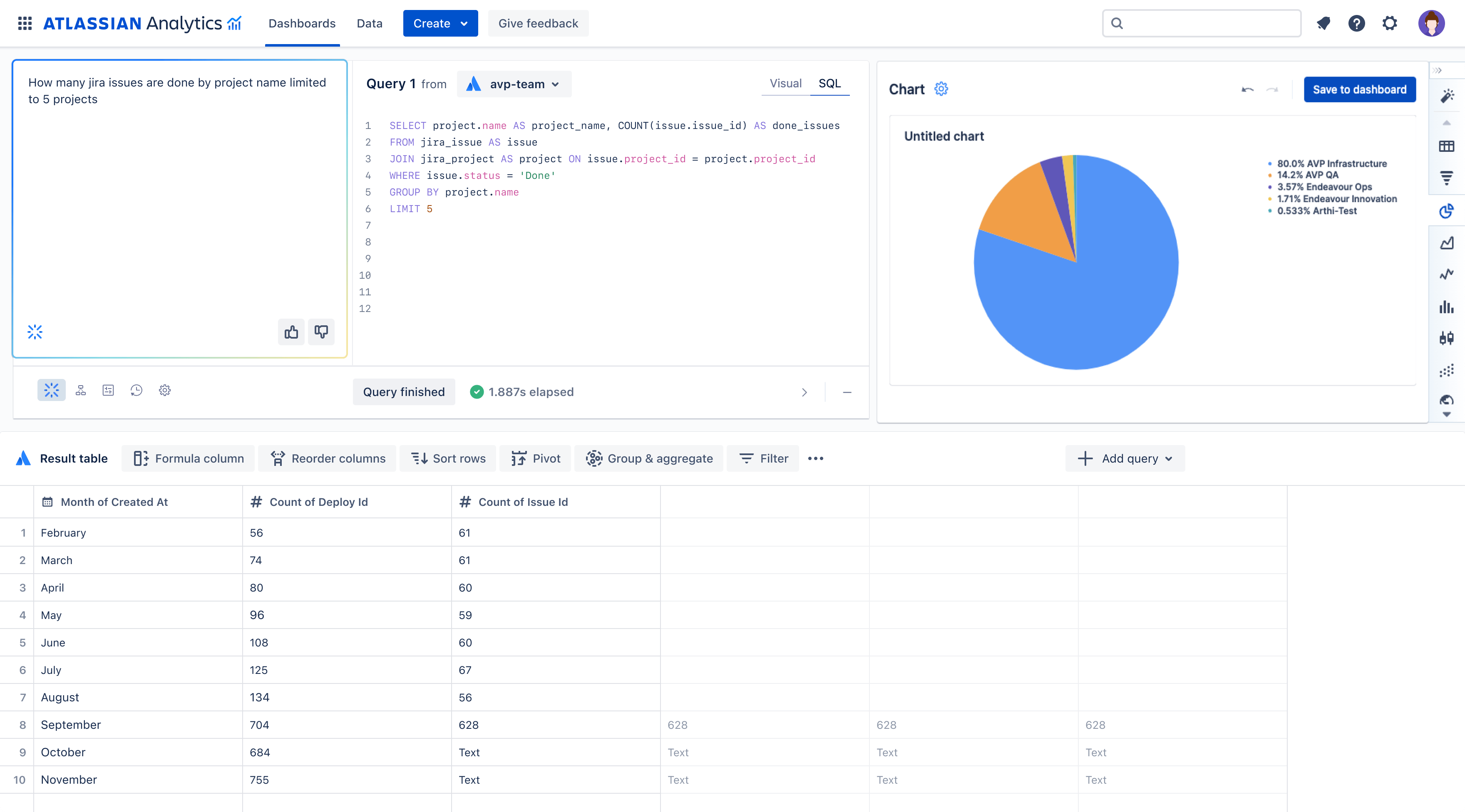
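To make the retriever/generator split concrete, here is a minimal Python sketch. It assumes a tiny hypothetical knowledge base held in a list, scores passages by simple word overlap (real systems usually use embedding similarity and a vector database), and stops at building the prompt that would be sent to an LLM rather than calling an actual model.

```python
import re
from collections import Counter

# Hypothetical "knowledge database". Production RAG systems usually store
# embeddings in a vector database instead of raw strings.
KNOWLEDGE_BASE = [
    "Refunds are issued within 5 business days of receiving a return.",
    "Orders over $50 ship for free within the continental US.",
    "Gift cards cannot be exchanged for cash.",
]

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9$]+", text.lower())

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Retriever: rank passages by word overlap with the query and keep the top k."""
    query_words = Counter(tokenize(query))
    scored = [(sum((query_words & Counter(tokenize(d))).values()), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop passages that share no words with the query at all.
    return [doc for score, doc in scored[:k] if score > 0]

def generate(query: str, passages: list[str]) -> str:
    """Generator stand-in: builds the grounded prompt an LLM would receive."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

question = "How long do refunds take?"
passages = retrieve(question, KNOWLEDGE_BASE)
prompt = generate(question, passages)
print(prompt)
```

Grounding the generator in retrieved passages is what lets a RAG product answer from your own content instead of only from what the model memorized during training.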

Text-to-SQL AI translates natural-language questions into SQL (Structured Query Language) queries that can be executed against a database. It is particularly useful when users need to interact with databases in plain language, for example in data analysis, reporting, or information retrieval tasks. It simplifies querying by letting users express what they want in natural language, without needing to know SQL syntax or the database schema.

The Map of Artificial Intelligence, Dr Milan Milanović
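Here is a minimal Python sketch of the Text-to-SQL flow using the standard-library sqlite3 module. The `translate_to_sql` function is a hypothetical stand-in for the language model call and simply returns a hard-coded query; the table, the data, and the naive SELECT-only guard are also illustrative assumptions, not a production design.

```python
import sqlite3

def translate_to_sql(question: str) -> str:
    """Hypothetical stand-in for the model call. A real Text-to-SQL system would
    send the question plus the table schema to a language model."""
    canned = {
        "How many orders were placed in March?":
            "SELECT COUNT(*) FROM orders WHERE strftime('%m', placed_on) = '03'",
    }
    return canned[question]

def run_readonly(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    # Naive guard: only run a single SELECT statement. A real product should
    # also connect with a read-only database role.
    stripped = sql.strip().rstrip(";")
    if not stripped.upper().startswith("SELECT") or ";" in stripped:
        raise ValueError(f"Refusing to run non-SELECT SQL: {sql!r}")
    return conn.execute(stripped).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, placed_on TEXT)")
conn.executemany("INSERT INTO orders (placed_on) VALUES (?)",
                 [("2024-03-02",), ("2024-03-15",), ("2024-04-01",)])

question = "How many orders were placed in March?"
sql = translate_to_sql(question)
print(sql)                      # show the generated SQL next to the question
print(run_readonly(conn, sql))  # [(2,)]
```

Showing the generated SQL next to the user's question also gives people a way to check what the model actually asked the database.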

If you’re new to this space, here’s my point in introducing this taxonomy: chat isn’t the only solution, and it’s not always the best interface.

Your team might be excited about their latest ChatGPT experiences, but newcomers need to familiarize themselves, even at a very high level, with the AI toolbox.

Chat can be great for simple and familiar workflows, but it can be terrible for unfamiliar ones. I have found that chat is not good for browsing. Imagine shopping at your local Walmart, but only through a window in the wall of the building while a worker asks, "What do you want? I'll go get it." Retail thrives on browsing. Navigation is a nightmare in chat. Picture yourself using an IVR (interactive voice response) phone system that presents menu items to you one by one.

Voice input, often paired with chat, is a notoriously inaccurate form of input. You would be extremely hesitant to purchase a $500+ airline ticket using a voice assistant; you need more certainty that you were understood correctly. You can also multitask visually (think of a desktop with many open windows), but imagine hearing multiple audio responses at the same time.

Memorable Cortana voice input presentation failure

Our brains process information faster and more accurately when we combine our senses. Take the innovative Rabbit R1 AI device. With some simple visual confirmation, you can quickly communicate and make trustworthy decisions. The Rabbit team realized that chat and voice were not enough and incorporated a touch screen for visual confirmation and clear decision-making.

Visual and touch confirmation after a voice command

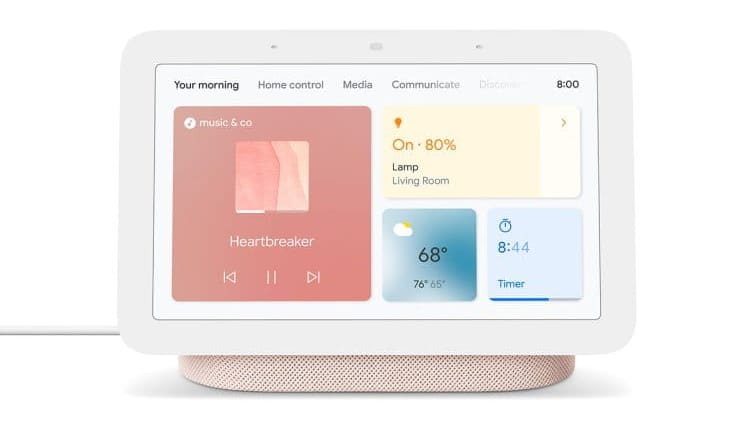

Google and Amazon have both realized how much better their voice assistants are with a visual element. Their screens display information, wayfinding, capabilities, and controls, and they support multitasking.

Visual layer alongside voice commands on Google's Nest Hub

Even when working with other humans, we rely on visual artifacts. At a coffee shop, a screen confirms the purchase total, and the barista may then print a physical receipt, which we sign to clearly communicate our authorization. You could ask out loud where the bathroom is, or you could simply follow the signs.

You can’t simply add a chatbot and expect a great user experience when integrating AI into your application.

Some common UX patterns with AI

Identifiers

Identifiers provide visual cues that communicate where AI is being used. Designers should aim to make AI processes and recommendations obvious. Identifiers tell users which content is generated by AI and which is written by humans.

Icons

Current trends lean on familiar emoji. I understand the urge to explain this new tool as "magic". However, how many of our past inventions have felt so incredible that they seemed unexplainable? I predict this magic metaphor will evolve into something more descriptive.

- ✨ Sparkling stars

- 🪄 Magic wands

- 🔮 Crystal balls or mysterious orbs

- 🤖 Robots

Color

Contrasting colors are being used to differentiate AI in the UI. Trends are emerging, and they can help users quickly identify AI features.

- Purple (most dominant)

- Green (popularized by ChatGPT)

- Gradients (Linear, angled, radial, clipping)

- Shifting colors

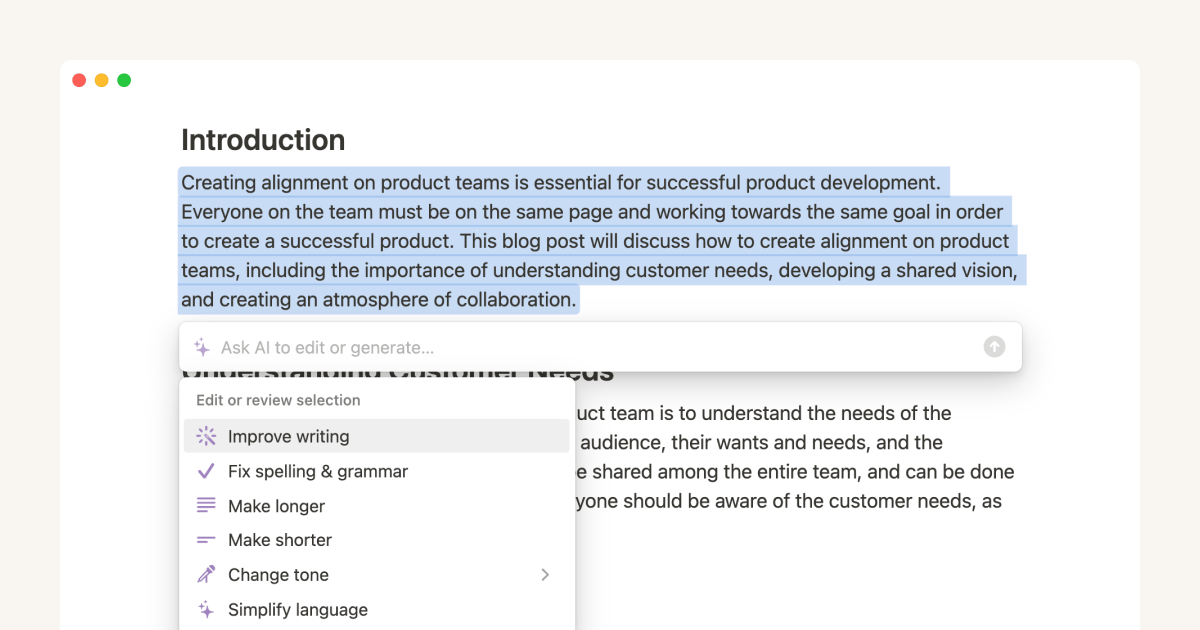

Notion now has multiple AI features, so they lean on purple coloring

Animation

Animations can be combined with iconography and color to signify AI at work.

Atlassian uses animation showing AI in progress

Brand Personas

Brand personas are another way to separate humans from AI. Brands often personify their AI to make interactions feel more natural.

- Siri

- Google Assistant

- Alexa

- Bixby

- Jasper

GitHub Copilot's animated character

Wayfinders

Because AI interfaces are often simplified, users need guidance on what they can do.

Nudges

Nudges visibly present clues to actions users can take.

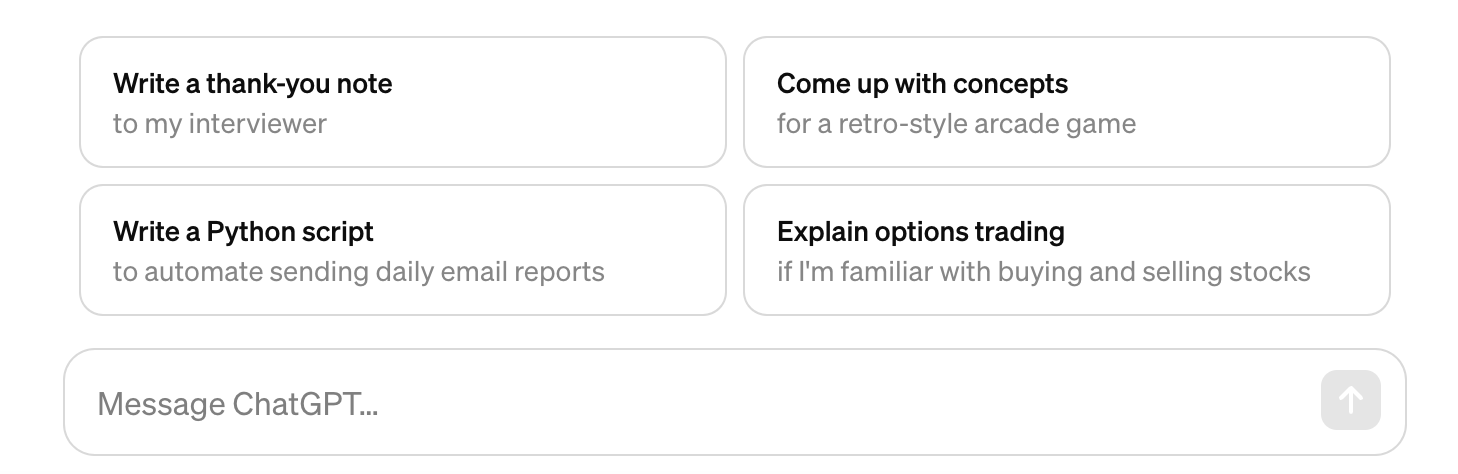

ChatGPT providing some use cases for its tool

Suggestions

Suggestions are similar to nudges but are generally used like placeholders in chat interfaces.

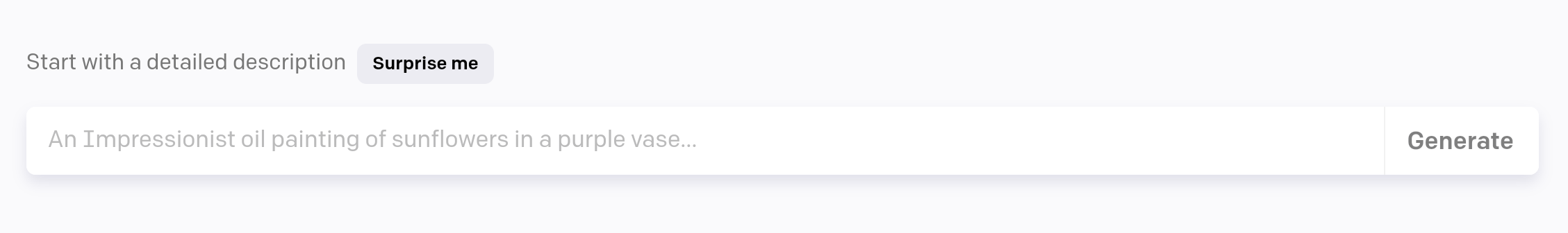

DALL·E suggesting how to get started generating an image

Templates

Templates give users a head start with common use cases.

FigJam lets you get started with common maps and exercises

Prompts

Prompts are designed to elicit responses or creations from the AI model, which can include text, images, music, or other forms of content.

Generate content (Auto-fill)

AI can generate content into one or more fields of a spreadsheet or database. Generation can be triggered automatically or manually, depending on user needs.

Coda's autofill generating database content on the fly
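As a rough sketch of auto-fill, the Python below fills one column of a table only where cells are empty, so AI output never overwrites what a person typed. The row format, the column names, and `fake_model` (standing in for a real model call) are all hypothetical.

```python
from typing import Callable

Row = dict[str, str]

def autofill(rows: list[Row], column: str, generate: Callable[[Row], str],
             only_empty: bool = True) -> list[Row]:
    """Fill `column` in each row using `generate`. By default, cells that already
    contain human-entered text are left untouched. Returns new rows (no mutation)."""
    filled = []
    for row in rows:
        new_row = dict(row)
        if not only_empty or not row.get(column):
            new_row[column] = generate(row)
        filled.append(new_row)
    return filled

products = [
    {"name": "Trail shoe", "tagline": ""},
    {"name": "Rain jacket", "tagline": "Stay dry on any summit"},
]

def fake_model(row: Row) -> str:
    # Hypothetical stand-in for a call to a language model.
    return f"AI tagline for {row['name']}"

result = autofill(products, "tagline", fake_model)
print(result)
```

Whether `autofill` runs on every edit (automatic) or behind a "Fill with AI" button (manual) is a product decision; the fill logic stays the same.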

Open Chat

Open text fields are the predominant way we’ve encountered conversational AI. Open-ended text boxes still have issues such as misuse, security risks, and users not understanding what the system can do. Infinite possibilities can create paralysis and leave users scratching their heads. Prompting is a new skill users will need to develop, much as we learned to “Google” our questions; some people are better at searching the web than others.

Anthropic's Claude has an open text field with no wayfinders

Blend

Blending lets users combine results without having to understand the prompts that were used to create them.

Midjourney's image blend feature combines two images as a prompt

Synthesis

Users can submit multiple objects to be sorted, categorized, organized, or summarized.

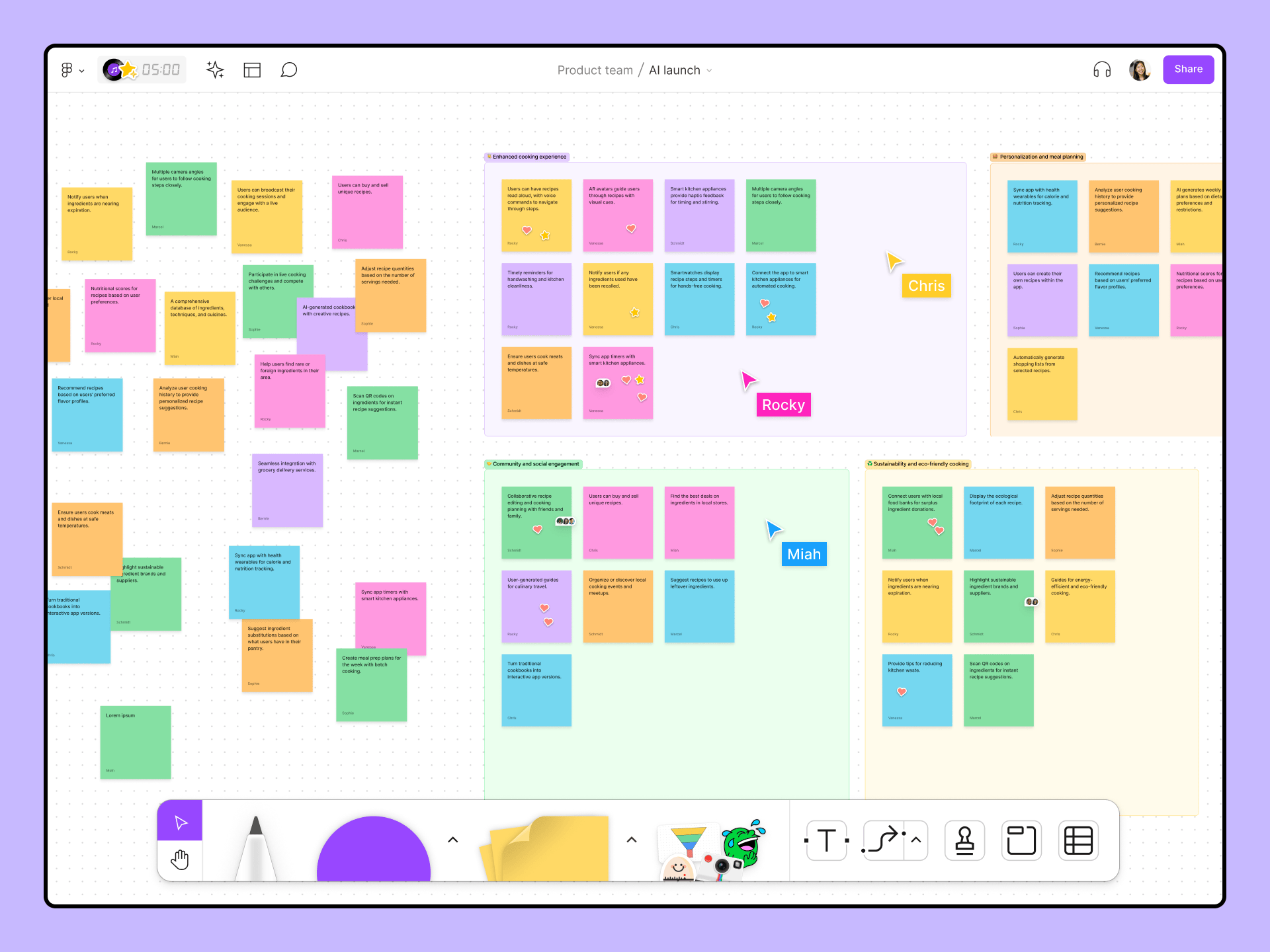

FigJam sorts and summarizes groups of sticky notes

Tuning

Natural language is far less precise than a programming language. It is difficult to be specific when describing what we want in words. We try to fix this by adding more words, but the result doesn’t always match what we pictured in our minds. Returning to the genie-in-the-bottle metaphor, Daniel Stillman, author of "Good Talk: How to Design Conversations That Matter", explains that in traditional genie stories, the genie and the person who rubbed the lamp always have communication errors, and those errors tend to be catastrophic.

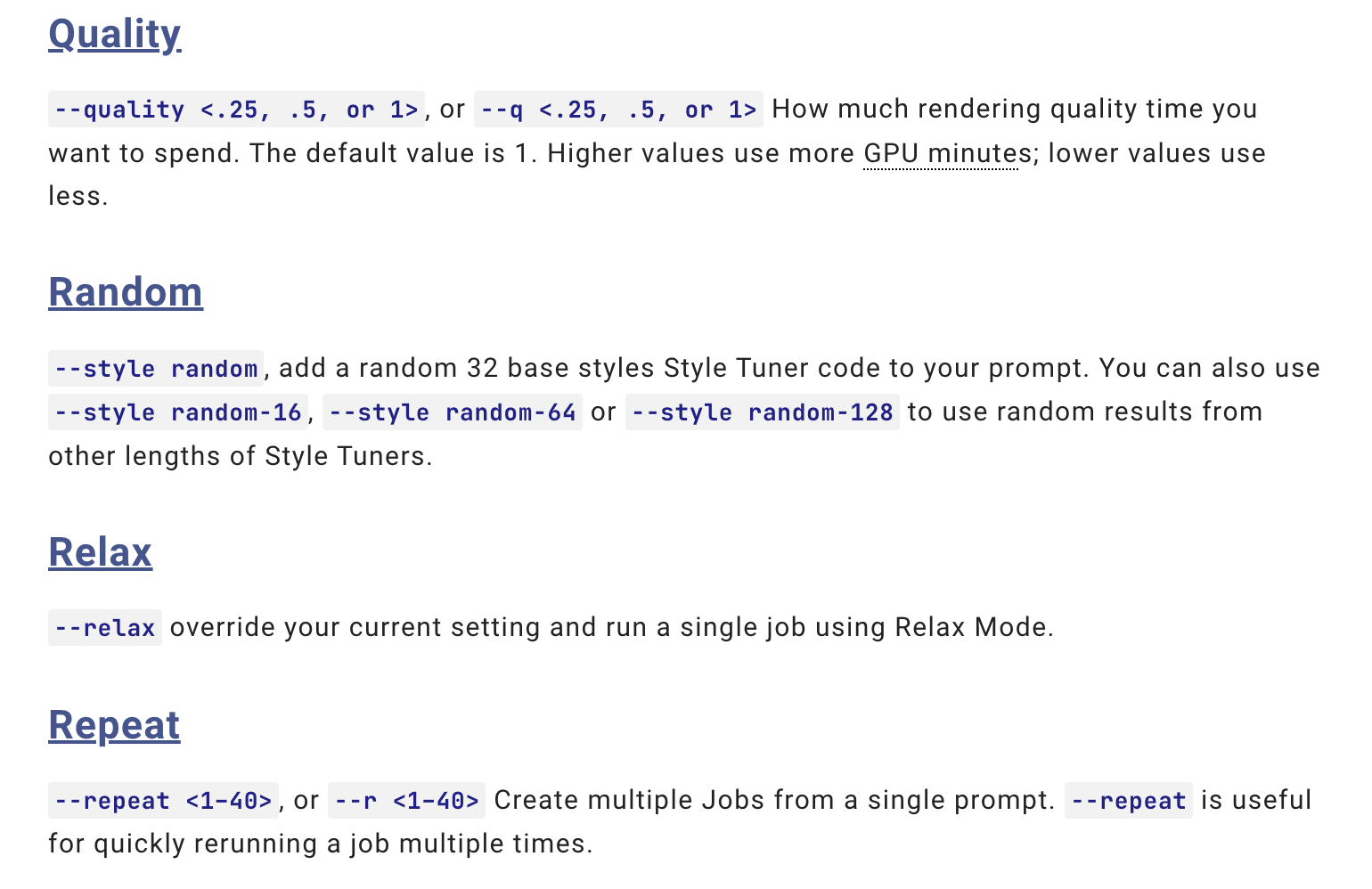

Parameters/Filters

To make up for the lack of specificity in everyday language, AI tools have been adding coded parameters that you can include alongside your natural-language prompt. Filters, which are visual, clickable options in the interface, make these parameters more accessible. Some may wonder whether this is simply a return to traditional programming. I would argue instead that we are moving toward a better balance between natural language and coded parameters.

Midjourney develops parameters for prompting

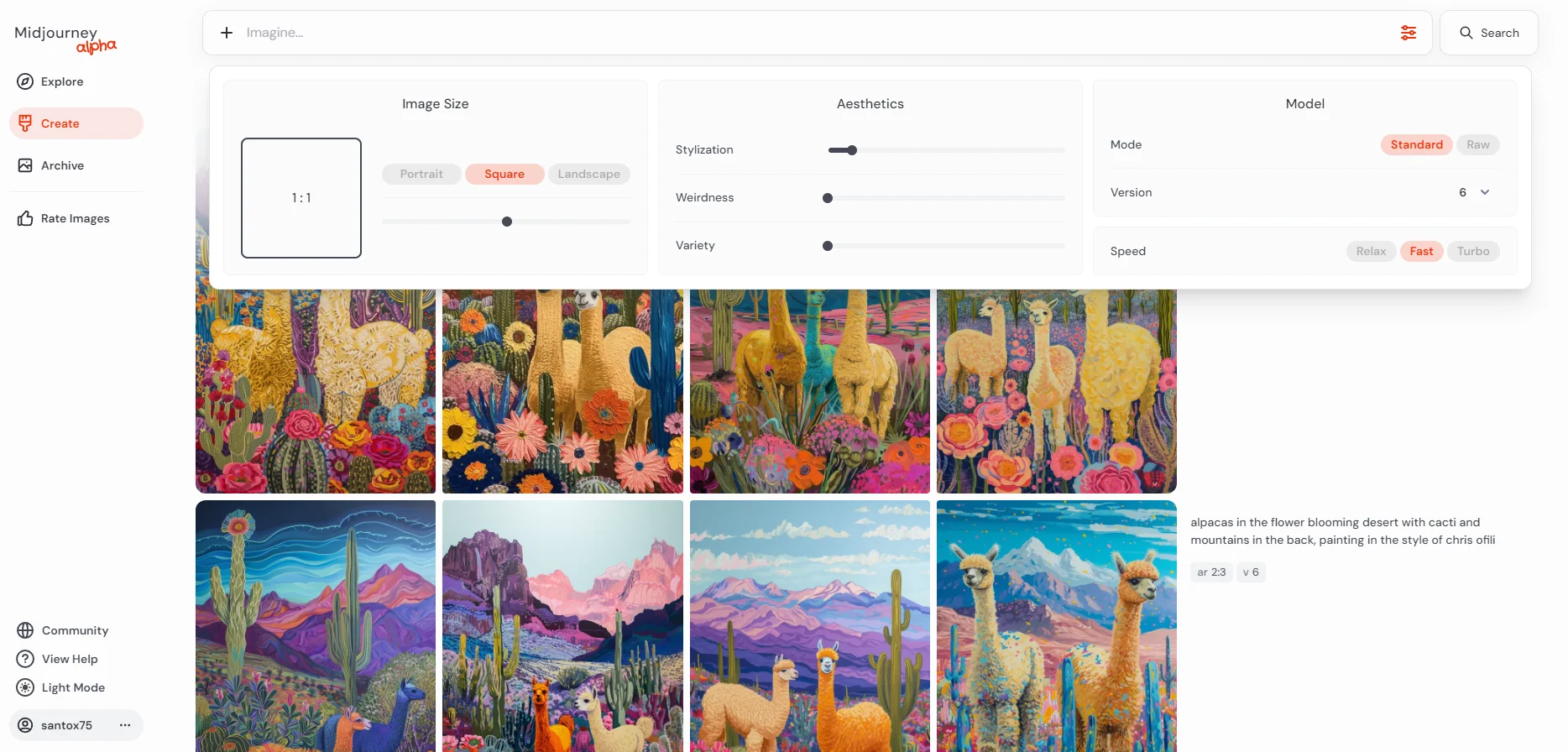

Midjourney's new website turned these parameters into user-friendly filters

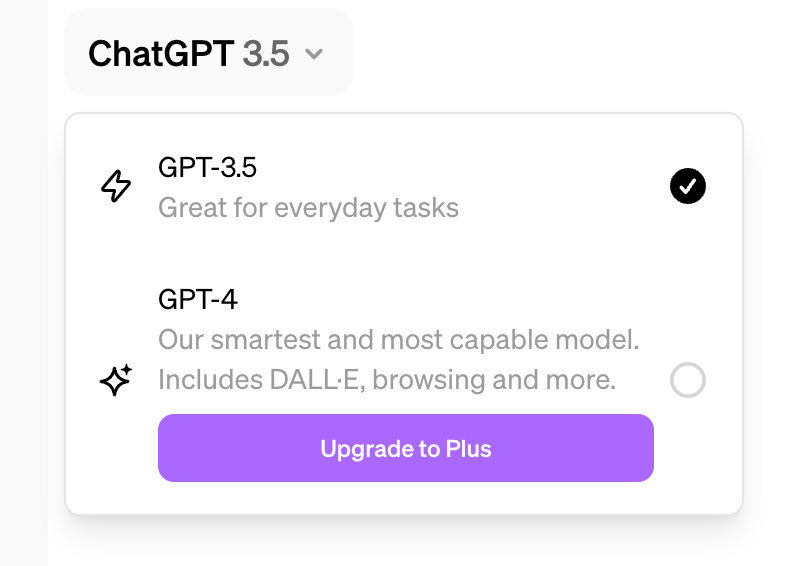
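The filter-to-parameter idea can be sketched in a few lines of Python: each clickable filter maps to a parameter appended to the natural-language prompt. The filter keys are hypothetical UI state; the flag names (`--ar`, `--stylize`, `--chaos`, `--no`) are modeled on Midjourney's documented parameters, but treat them as an assumption and check the current Midjourney docs.

```python
def build_prompt(text: str, filters: dict[str, object]) -> str:
    """Append Midjourney-style parameters for whichever filters the user set."""
    # Hypothetical mapping from UI filter keys to prompt parameters.
    flags = {
        "aspect_ratio": "--ar",   # e.g. "16:9"
        "stylize": "--stylize",   # strength of the default aesthetic
        "chaos": "--chaos",       # variety between generated images
        "exclude": "--no",        # things to leave out of the image
    }
    parts = [text.strip()]
    for key, flag in flags.items():
        value = filters.get(key)
        if value is None or value == "":
            continue  # filter not set in the UI, so no parameter is added
        parts.append(f"{flag} {value}")
    return " ".join(parts)

prompt = build_prompt("a lighthouse at dusk, watercolor",
                      {"aspect_ratio": "16:9", "stylize": 250, "exclude": "people"})
print(prompt)  # a lighthouse at dusk, watercolor --ar 16:9 --stylize 250 --no people
```

The user only touches sliders and toggles, while the prompt still carries the exact, machine-readable settings.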

Model Management

Users will want to choose models based on pricing, performance, accuracy, privacy, speed, and more. They may even want to switch models within a session or compare output results.

ChatGPT's model switcher

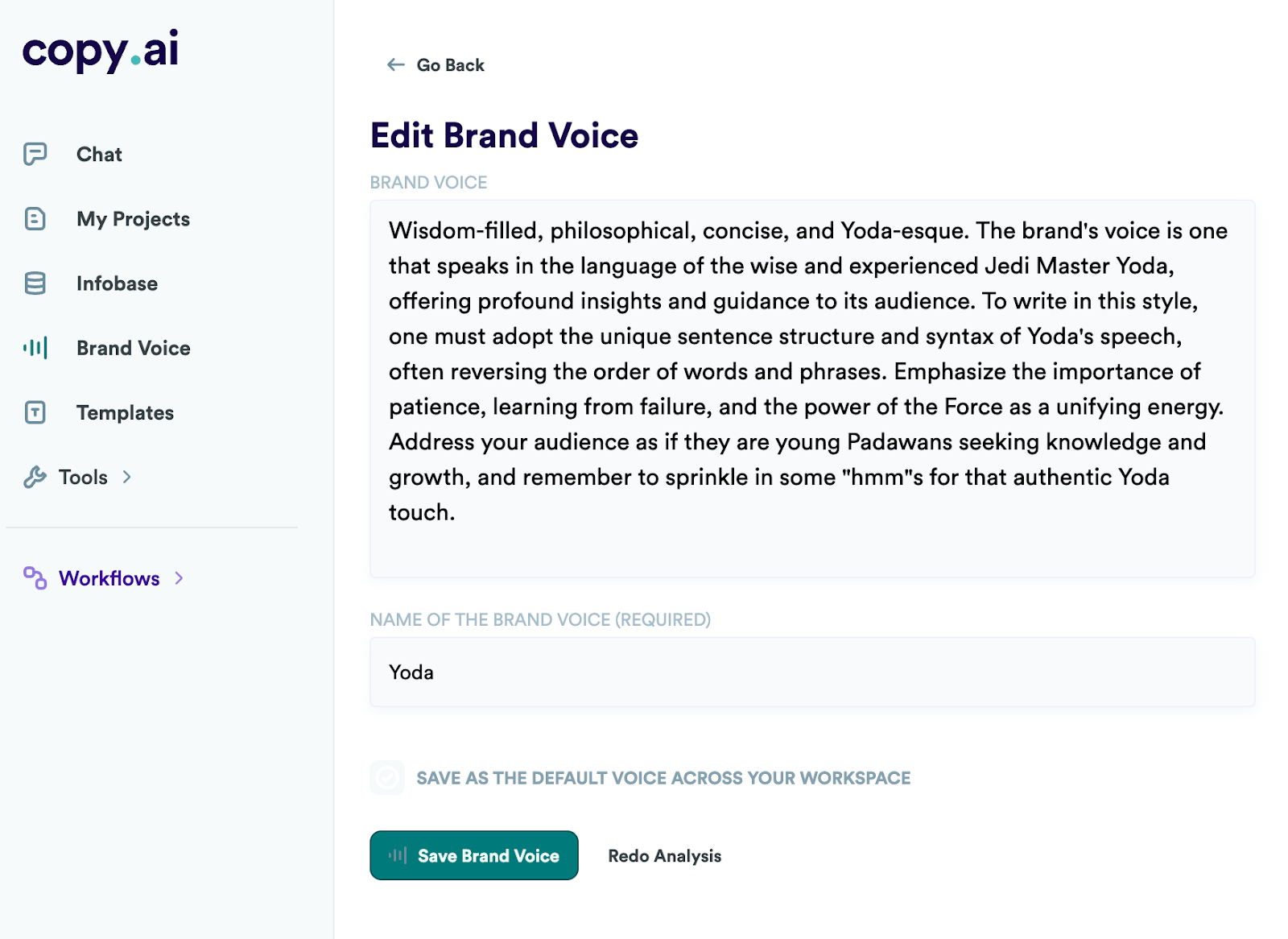

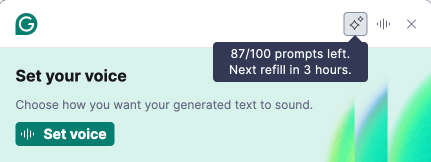
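One way a product could turn those preferences into a default model choice is sketched below in Python. The model names, prices, latencies, and quality scores are invented for illustration, and picking the cheapest model that meets the user's constraints is just one reasonable policy among many.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class ModelOption:
    name: str                  # hypothetical model identifier
    cost_per_1k_tokens: float  # illustrative price, not a real rate
    avg_latency_s: float       # typical time to a full response
    quality: float             # 0..1 score from your own evaluations (assumed)
    runs_on_device: bool       # True if data never leaves the device

CATALOG = [
    ModelOption("large-cloud", 0.030, 4.0, 0.90, False),
    ModelOption("medium-cloud", 0.002, 1.2, 0.75, False),
    ModelOption("small-local", 0.0, 0.4, 0.60, True),
]

def pick_model(catalog: list[ModelOption], min_quality: float, max_latency_s: float,
               require_on_device: bool = False) -> Optional[ModelOption]:
    """Return the cheapest model meeting the user's constraints, or None if none do."""
    candidates = [
        m for m in catalog
        if m.quality >= min_quality
        and m.avg_latency_s <= max_latency_s
        and (m.runs_on_device or not require_on_device)
    ]
    return min(candidates, key=lambda m: m.cost_per_1k_tokens, default=None)

choice = pick_model(CATALOG, min_quality=0.7, max_latency_s=2.0)
print(choice.name if choice else "No model fits these settings")  # medium-cloud
```

Returning `None` instead of silently falling back gives the UI a chance to tell the user that no model fits their settings.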

Personal voice/Tone

Defining a tone of voice for a professional or a brand makes generated content sound more authentic. Some tools even let you store key terms or phrases.

CopyAI lets you control brand tone

Personalization

Designers should leverage AI algorithms to create personalized experiences that adapt to individual user needs in real time. This does require knowing information about the user.

Google's Gemini has plenty of personal knowledge to work from

Other Indicators

Loading Indicators

If an operation is complicated and takes a while, it’s important to tell the user that it is in progress. Use this opportunity to explain your business value, market your shiny new AI, remind users of any legal requirements, or add some brand personality. Keep users patient with progressive loading, skeleton loaders, and other techniques that improve perceived performance.

Google's Gemini uses animation, skeleton loaders, and progressive loading

Usage Indicators

Business models and licensing are changing every day. Some products offer a freemium experience but will eventually require payment, especially for shiny new AI features that tend to cannibalize existing products. Usage indicators help users budget their usage so the experience doesn’t shut off unexpectedly when they reach the end of their allowance. I’ve recently been thinking of these services as a “utility” you pay for at home: water, electricity, internet, AI. With most utilities, you can manage your usage to save costs or make wiser spending decisions.

Grammarly allows users to budget usage by showing remaining balance
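A usage indicator mostly comes down to simple arithmetic plus a warning threshold. This Python sketch assumes a hypothetical allowance measured in credits and warns once 80% of it is used; both the unit and the threshold are illustrative choices.

```python
def usage_status(used: int, allowance: int, warn_at: float = 0.8) -> str:
    """Label for a usage meter that warns before the allowance runs out."""
    if allowance <= 0:
        raise ValueError("allowance must be positive")
    remaining = max(allowance - used, 0)  # never show a negative balance
    if remaining == 0:
        return f"Limit reached: 0 of {allowance} credits left"
    if used / allowance >= warn_at:
        return f"Running low: {remaining} of {allowance} credits left"
    return f"{remaining} of {allowance} credits left"

for used in (20, 85, 100):
    print(usage_status(used, allowance=100))
# 80 of 100 credits left
# Running low: 15 of 100 credits left
# Limit reached: 0 of 100 credits left
```

Warning before the limit, rather than only at it, is what lets users budget instead of being cut off mid-task.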

Trust Indicators

Users need an understanding of how AI algorithms make decisions and recommendations. Providing transparency instills trust and confidence in the system.

Caveats

Caveats alert users to any deficiencies or potential risks associated with the model or the technology in general. Users need to know that AI tools do not always provide accurate results.

Notion has a strong warning against possible accuracy mistakes

Footprints/History

Footprints trace the path from the sources used to the results. They reveal what’s going on “under the hood” to produce those results.

Atlassian shows your text query alongside SQL code

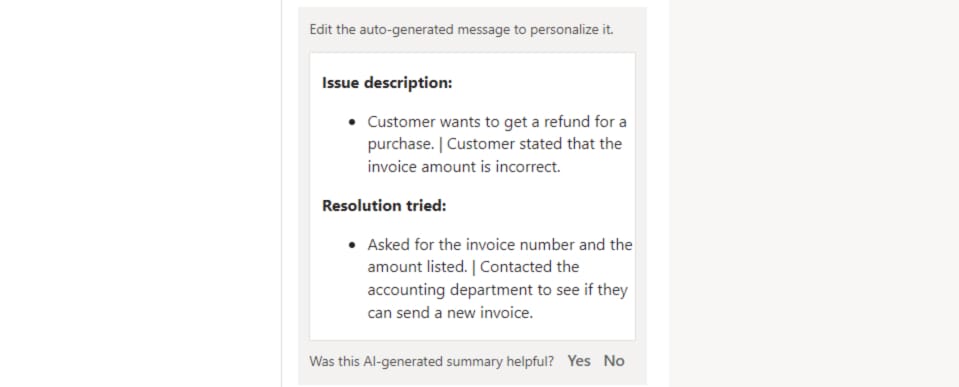

Human-in-the-loop

Human-in-the-loop (HITL) refers to a system where human oversight is integrated alongside automation. Humans review AI results, provide input, and correct errors to ensure accuracy. HITL becomes more valuable the more accuracy matters: accuracy isn’t paramount when picking your next recommended song, but it is critical when giving medical guidance or driving an autonomous car.

Microsoft's Dynamics 365 allows you to edit AI-generated summaries.

Another angle on HITL is co-creation. In this view, AI is not meant to replace all human activities; rather, it works alongside people to empower them.
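A common way to put a human in the loop is to route results by stakes and model confidence. The Python sketch below assumes the pipeline provides a 0 to 1 confidence score; the stakes levels and thresholds are hypothetical and would need tuning for a real product.

```python
from dataclasses import dataclass

@dataclass
class AIResult:
    text: str
    confidence: float  # assumed 0..1 score produced by the model pipeline

# Hypothetical thresholds: below these scores, a person reviews the output.
REVIEW_THRESHOLDS = {"low": 0.5, "medium": 0.8}

def route(result: AIResult, stakes: str) -> str:
    """Return "auto" to show the result directly or "human_review" to queue it."""
    if stakes == "high":
        # e.g. medical guidance: a person always signs off, whatever the score
        return "human_review"
    return "auto" if result.confidence >= REVIEW_THRESHOLDS[stakes] else "human_review"

print(route(AIResult("Up next: a mellow jazz playlist", 0.62), "low"))  # auto
print(route(AIResult("Summary of the customer call", 0.71), "medium"))  # human_review
print(route(AIResult("Suggested dosage: ...", 0.97), "high"))           # human_review
```

The song recommendation ships straight to the user, while anything high-stakes waits for a person, mirroring the trade-off described above.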

Ethical Considerations

Designing UX for AI also involves ethical considerations regarding data privacy, bias, and fairness. AI algorithms can inadvertently perpetuate biases present in their training data, leading to unfair outcomes. You’ve probably heard plenty about this in the news. Chances are that, as a UX designer, you are not the one training the models, but you will work with those who do.

With AI tech, will UX become irrelevant?

The foundation of designing any user experience, including AI, lies in understanding user needs and behaviors. AI doesn’t remove the need for understanding user needs. AI-powered applications should be designed with a deep understanding of the context in which they will be used and the specific tasks they are intended to assist with. AI is a technological solution but still needs to be applied to the right problems/opportunities your product is aiming to address. So in short, no. UX will not be irrelevant.

If you believe that simply embedding ChatGPT in your product is enough to improve your customer experience, remember that everyone has access to OpenAI. To truly add value to your business, you need deep integrations with your product and specially trained models. Ask yourself whether users could achieve the same goals by using ChatGPT in another browser window. If the answer is yes, you haven't created any value for your product. To create business value, performance, cost, strategy, and experience need to come together as you work through some trial and error.

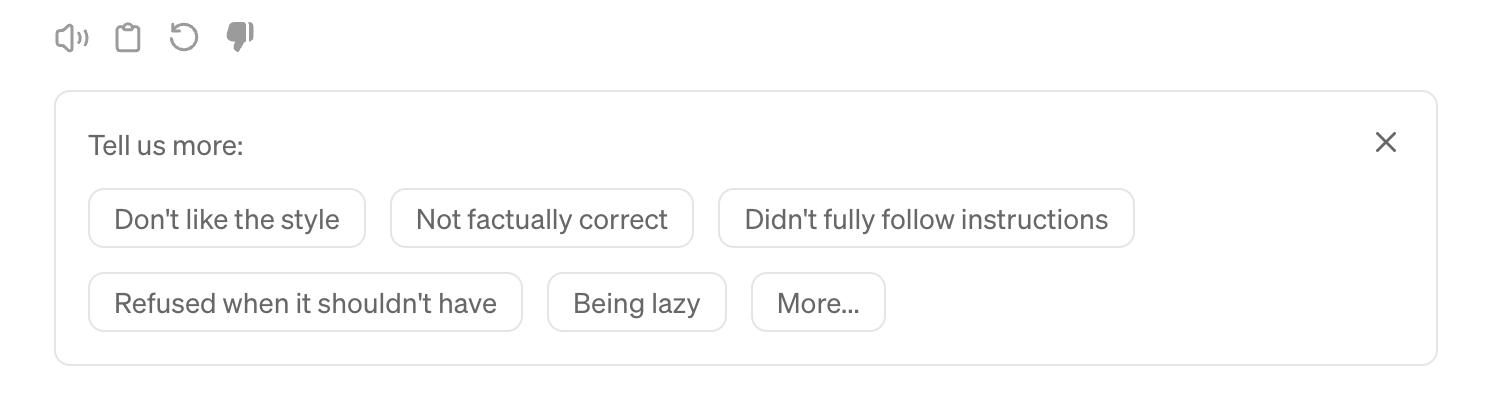

Tuning the tuners: Getting feedback on results

Giving users opportunities to provide feedback helps improve the accuracy and reliability of AI systems over time. Informed by that feedback, data science teams can tweak the models and make your product better.

ChatGPT has many ways to give feedback
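Feedback is most useful to data science teams when it is captured in a structured form. This Python sketch records hypothetical thumbs-up/down events and computes an approval rate per model; the field names and model identifiers are assumptions for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Feedback:
    response_id: str
    model: str
    helpful: bool      # thumbs up (True) or thumbs down (False)
    reason: str = ""   # optional free text, e.g. "inaccurate" or "too long"

def approval_rates(events: list[Feedback]) -> dict[str, float]:
    """Share of helpful votes per model, a simple signal for the data science team."""
    counts = defaultdict(lambda: [0, 0])  # model -> [helpful votes, total votes]
    for event in events:
        counts[event.model][1] += 1
        if event.helpful:
            counts[event.model][0] += 1
    return {model: helpful / total for model, (helpful, total) in counts.items()}

events = [
    Feedback("r1", "model-a", True),
    Feedback("r2", "model-a", False, "inaccurate"),
    Feedback("r3", "model-b", True),
]
print(approval_rates(events))  # {'model-a': 0.5, 'model-b': 1.0}
```

Keeping the optional `reason` alongside the vote lets the team see not just that an answer failed, but why.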

Resources

Shape of AI

I thank Emily Campbell for creating this new resource. It has become an influential hub, and I often send designers to it. I think it still has some growing to do, but I love seeing these patterns all in one place! I HIGHLY recommend bookmarking it.

shapeof.ai

Invisible Machines Podcast, by UX Magazine

This podcast focuses more on forward thinking and philosophical direction than on educational content for designers. The interviews can be a little disorganized and chatty. The hosts are heavy tech optimists and carry a few biases of their own, but I recommend listening in if you are looking for “what’s next”. They interview intelligent, business-minded guests.

On Spotify

Chris Ashby

Why voice input is flawed as a primary interface input mode
